Not all sound waves are created equal - even two similar signals will display slight differences in loudness and tone. Some sound waves, however, are identical (or nearly the same), and when they're stacked on top of one another, audio phasing can occur. But what is audio phasing, exactly?

Audio phase is one of those phenomena that can be tricky to wrap your head around. Once you’ve got a grip on it, though, you’ll be able to take your productions to a whole new level. In this article, we'll go over the basics of audio phase, why it matters, and how to address phase issues in your productions.

What is "Phase" in Audio?

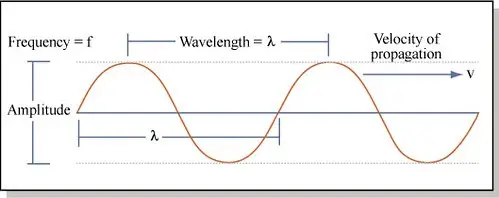

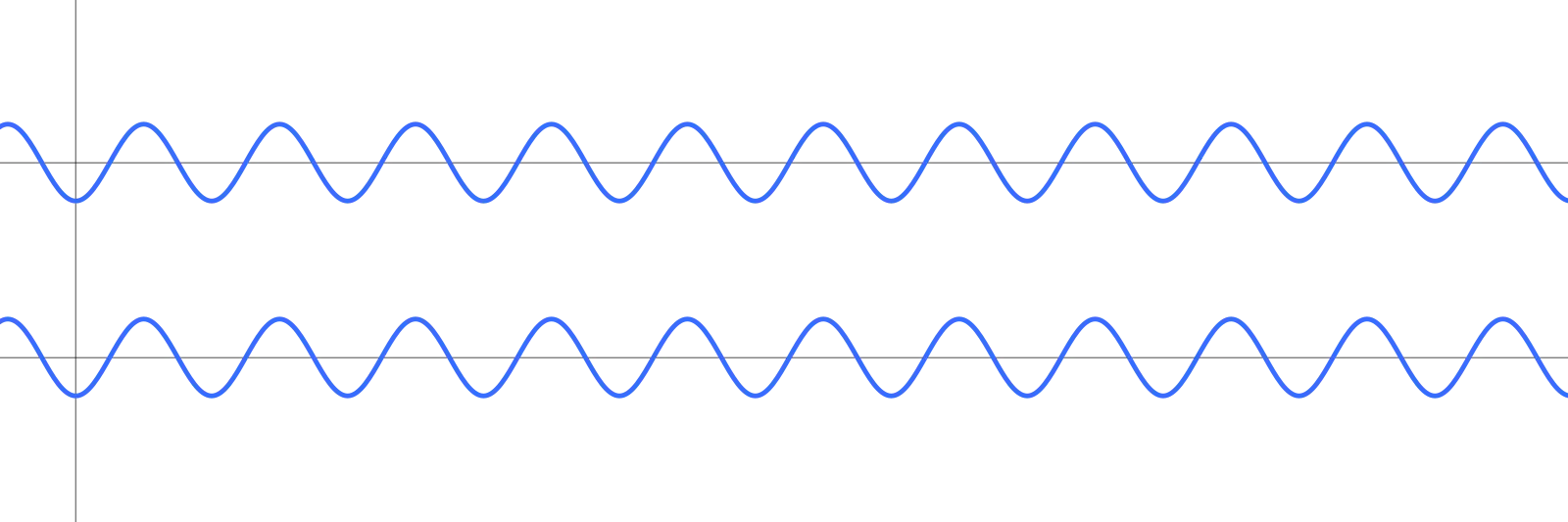

Audio phase indicates a point in time within a given sound wave. Sound waves are constructed of three main components: amplitude, wavelength, and frequency:

Amplitude refers to the loudness of the wave at a particular point in time. For a perfectly symmetrical and repetitive sound wave (like the sine wave shown above), wavelength measures the distance between two matching points on consecutive cycles, such as one peak and the next. Frequency, which we perceive as pitch, is the number of times per second the sound wave repeats that cycle.

The phase of a sound wave tells us where exactly along this cycle we’re looking. In audio production, the relationship between two or more waveforms is what really matters; the absolute phase of a single sound wave isn’t all that relevant for reasons we’ll discuss next.
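To make "where along the cycle" concrete, here is a minimal Python sketch that converts a frequency and a moment in time into a phase angle. The function name `phase_degrees` and the 100 Hz example are illustrative assumptions, not part of any audio library.

```python
def phase_degrees(frequency_hz: float, time_s: float, start_phase_deg: float = 0.0) -> float:
    """Return the position within the current cycle, from 0 up to (not including) 360 degrees."""
    cycles_elapsed = frequency_hz * time_s
    return (start_phase_deg + 360.0 * cycles_elapsed) % 360.0


# A 100 Hz wave completes one cycle every 10 ms (assumed example values).
quarter_cycle = phase_degrees(100.0, 0.0025)  # a quarter of the way through a cycle
half_cycle = phase_degrees(100.0, 0.005)      # halfway through a cycle
print(quarter_cycle, half_cycle)
```

Note that the result only becomes meaningful in a mix when you compare it with the phase of another wave at the same moment.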

Why is Phasing Important?

Audio mixing is all about combining distinct but cohesive elements so that every component can be heard as intended by the artist, producer, and engineer. As such, you’ll be juggling countless sound waves that each vary in frequency, amplitude, harmonic overtones, and more.

What’s bound to happen is that some waves will go in and out of phase with one another at different points in time. When two signals are “in phase” with one another, their amplitudes (i.e., peaks and troughs) coincide. Understanding phase is crucial for optimizing your mixes. Phase problems are at the root of many mixing problems and have major implications on the overall sound.

When Waves Collide

To keep things simple, imagine two perfectly symmetrical and repetitive sine waves, one in the left channel and one in the right. When both halves are perfectly aligned, their amplitudes are identical across time, meaning you would hear the same sound on both sides.

Bring those channels together and play them at the same time, and you’ve got what’s known as “constructive interference” because the combination of these in-phase waves doubles the resultant amplitude. Conversely, if these channels were perfectly “out of phase” (i.e., the lowest amplitude of one channel’s wave occurs when the other channel’s wave is at its loudest), their peaks and troughs would cancel each other out. This is called “destructive interference” or “phase cancellation.”
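The two cases above can be checked numerically. The following sketch generates sine waves as plain Python lists, sums them sample by sample, and reports the peak level. The 48 kHz sample rate, the 1 kHz test tone, and the helper names are assumptions chosen for illustration.

```python
import math

SAMPLE_RATE = 48_000  # samples per second (assumed for this sketch)


def sine(frequency_hz, phase_deg=0.0, num_samples=480, amplitude=1.0):
    """Generate a sine wave with the given starting phase."""
    phase_rad = math.radians(phase_deg)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * n / SAMPLE_RATE + phase_rad)
        for n in range(num_samples)
    ]


def mix(a, b):
    """Sum two signals sample by sample, as a mono bus would."""
    return [x + y for x, y in zip(a, b)]


def peak(signal):
    """Largest absolute sample value."""
    return max(abs(s) for s in signal)


reference = sine(1000.0)
in_phase_sum = mix(reference, sine(1000.0, phase_deg=0.0))        # constructive
out_of_phase_sum = mix(reference, sine(1000.0, phase_deg=180.0))  # destructive
quarter_cycle_sum = mix(reference, sine(1000.0, phase_deg=90.0))  # somewhere in between

print(peak(in_phase_sum), peak(out_of_phase_sum), peak(quarter_cycle_sum))
```

The in-phase sum peaks at about twice the original level, the 180-degree sum is effectively silent, and intermediate offsets land between those extremes.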

How Phase in Audio Works in Practice

The precise scenario described above isn’t common in the real world since these perfect, fundamental sound waves won’t be what you’re working with, but the theory still applies. Whether you’re recording a single instrument or multiple instruments with any number of microphones, phasing will be a factor that shouldn’t be ignored.

Phase interactions also come into play when layering samples over acoustic drums, using different plugins on similar tracks, applying parallel processing, and more.

Simply put, audio phase is a factor whenever two or more signals are combined - the more related those signals are, the more relevant phase becomes.

If you’re recording an instrument with two separate microphones (stereo recording), the incoming fundamental frequencies (i.e., the notes played) will be the same in each channel.

However, because each microphone is in a unique spatial position, different overtones will enter each mic at a different time. As a result, each channel’s sound waves will be similar in some respects but different in others.

Different frequencies may get boosted, diminished, or virtually canceled depending on the phase relationship between the two channels. As you might imagine, introducing another microphone or more into the fold makes things even more complicated, increasing the odds of phase issues.
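As a rough illustration of why mic position matters, the sketch below estimates how much later a sound reaches the farther of two mics and which frequencies then arrive half a cycle late, making them the most prone to cancellation when the mics are summed. It assumes a single direct sound path, a speed of sound of about 343 m/s, and hypothetical distances; real rooms and instruments are messier.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at room temperature


def arrival_delay_s(near_mic_m, far_mic_m):
    """Extra travel time to the farther mic, given each mic's distance from the source."""
    return (far_mic_m - near_mic_m) / SPEED_OF_SOUND_M_S


def cancelled_frequencies_hz(delay_s, max_hz=20_000.0):
    """Frequencies at which the delayed copy is half a cycle late: odd multiples of 1 / (2 * delay)."""
    if delay_s <= 0:
        return []
    first = 1.0 / (2.0 * delay_s)
    result = []
    k = 0
    while (2 * k + 1) * first <= max_hz:
        result.append((2 * k + 1) * first)
        k += 1
    return result


# Hypothetical setup: one mic 0.30 m from the source, the other 0.643 m away.
delay = arrival_delay_s(0.30, 0.643)
print(delay, cancelled_frequencies_hz(delay)[:3])
```

Moving either mic changes the delay, which shifts every one of these frequencies at once; that is why small position changes can audibly alter the tone.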

And if two mics face each other from opposite sides of a source (above and below a snare drum, for example), one of them usually needs its polarity inverted to combat cancellation, which in the extreme case means silence.

The Drum Dilemma

When it comes to recording drums, phase problems often run rampant. After all, most modern drum recordings involve a minimum of 5 mics (or as many as 20) to capture each component, the entire kit, and room reflections.

It also doesn’t help that cymbals resonate at high frequencies or that a snare and kick drum often require two mics to capture their lower and higher frequencies. If you’re not strategic with your mic placement/configuration, your initial drum kit recording can become a mess and nearly impossible to mix into submission.

Fortunately, the handy “phase flip” (polarity reversal) switch featured on many preamps, mixers, and audio interfaces can quickly resolve phase cancellation problems during the recording process, whether you’re tracking drums, acoustic guitar, or anything else.
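In digital terms, a polarity flip simply negates every sample. The sketch below uses made-up sample values for two mics that capture the same hit with opposite polarity; the names `top_mic` and `bottom_mic` are hypothetical.

```python
def flip_polarity(samples):
    """Invert a signal: every positive sample becomes negative and vice versa."""
    return [-s for s in samples]


def mix(a, b):
    """Sum two signals sample by sample."""
    return [x + y for x, y in zip(a, b)]


# Made-up samples: the second mic sees the mirror image of the first.
top_mic = [0.0, 0.8, 0.5, -0.3, -0.1]
bottom_mic = [0.0, -0.8, -0.5, 0.3, 0.1]

raw_sum = mix(top_mic, bottom_mic)                   # cancels
fixed_sum = mix(top_mic, flip_polarity(bottom_mic))  # reinforces
print(raw_sum, fixed_sum)
```

A polarity flip is not a time shift. It fixes signals that are mirror images of each other, but it cannot fully correct two signals that are offset in time.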

Reflecting on Phase

Stereo miking isn't the only culprit of phase problems. You can run into phase issues even if you’re only recording into one channel, especially if your recording space isn’t properly treated. Sound waves easily bounce off acoustically reflective surfaces. These reverberations essentially duplicate the initial sound, sending back another, quieter, and tonally distinct version of it after a certain amount of time, depending on your proximity to the surface, the room’s size and shape, and so on.

If the timing of these reflections is just right, destructive or constructive interference may occur when captured by your microphone, altering the resulting tone and volume. Using delay and reverb effects intentionally can also cause phase problems.

To make matters more complicated, you might hear phase problems when playing back a recording, even if your recordings themselves don’t have phase issues. This issue can occur if your speakers themselves are “out of phase,” i.e., wired with improper polarity.

How to Find Audio Phase Issues

As your ear develops, you'll be able to hear phasing when it occurs. Of course, the human ear alone can't easily identify all phase issues, so additional tools and techniques come in handy.

Listening to your mix (and particular tracks) in mono rather than stereo can reveal certain phase problems. If, upon condensing your mix into mono, you notice the sound has become duller or thinner, you might be facing phase cancellation.

Likewise, if a sound that sits in the center drops out when you sum to mono but is still audible in the left and right channels on their own, you're likely facing out-of-phase audio. You can also identify audio phase problems with visual plug-ins designed with phasing in mind (we'll delve into these more in the next section).
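A mono check can also be approximated in code. This sketch computes a normalized correlation between the left and right channels, similar in spirit to the correlation meters found in many DAWs, and folds the channels down to mono. The function names and sample values are assumptions for illustration.

```python
import math


def phase_correlation(left, right):
    """Normalized correlation: near +1 means in phase, near -1 means out of phase."""
    dot = sum(l * r for l, r in zip(left, right))
    energy = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return dot / energy if energy > 0 else 0.0


def mono_sum(left, right):
    """Fold a stereo pair down to mono by averaging the channels."""
    return [(l + r) / 2 for l, r in zip(left, right)]


# Made-up channel data: one cycle of a simple waveform.
left = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5]
right_same = list(left)
right_inverted = [-s for s in left]

print(phase_correlation(left, right_same), phase_correlation(left, right_inverted))
print(mono_sum(left, right_inverted))
```

A reading that hovers near or below zero suggests the mono fold-down will lose level, which matches the thin or hollow sound described above.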

How to Fix or Prevent Phase Issues

With so many potential sources for phase problems, it’s crucial to arm yourself with knowledge, tricks, and tools that help you prevent and resolve such difficulties.

Know the 3:1 Ratio for Mic Placement

This method applies when working with two microphones: the second mic should be placed three times as far from the first mic as the first mic is from the audio source being recorded. If one mic is six inches from the guitar’s sound hole, you should set up the second mic 18 inches (1.5 feet) away from the first mic.

This trick doesn’t always work, and some adjustments may be needed, but it’s a good starting point for minimizing phase issues when recording with two microphones.
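The arithmetic behind the rule is simple enough to sketch. The second function below is an added assumption rather than something stated above: it applies the free-field inverse distance law to estimate how much quieter a sound becomes after traveling a given multiple of the distance, which is a common explanation for why the extra spacing helps.

```python
import math


def second_mic_spacing(source_to_first_mic):
    """Minimum suggested distance between the two mics, in the same unit as the input."""
    return 3.0 * source_to_first_mic


def level_drop_db(distance_ratio):
    """Approximate level drop after traveling `distance_ratio` times as far (free-field assumption)."""
    return 20.0 * math.log10(distance_ratio)


print(second_mic_spacing(6.0))  # six inches from the source, as in the example above
print(round(level_drop_db(3.0), 1))
```

Under that idealized assumption, a sound that travels three times as far arrives roughly 9.5 dB quieter, so any cancellation it causes when summed is much less pronounced. Reflective rooms will not follow this estimate exactly.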

The Mid/Side recording technique is designed to minimize potential phase problems.

Mix in Mono

It might seem counterintuitive to mix in mono, considering most tracks will be listened to in stereo. However, as mentioned above, some instances of phase cancellation can go unnoticed when listening back in stereo - bringing your tracks into mono at various intervals while mixing can reveal phase problems you might have otherwise missed.

Simply put, mixing in mono can help you further contextualize your mix as a whole and help you clear up any muddiness before returning to stereo sound.

Use Audio Phase Plug-ins

On top of proper mic placement and mixing in mono, you can also use various plug-ins to fix phase interference and easily visualize what’s going on in these moments.

Fortunately, there’s no shortage of phase-fixing implements available these days. Some prime examples include Waves’ InPhase plug-in, Little Labs’ In-Between Phase, and Eventide Precision Time Align.

And if you get good enough at recognizing phase issues with your ear, simply moving tracks a tiny bit to the left or right can also fix phase issues. This trick won’t always work, especially if your track sticks strictly to the grid.

Move Waveforms Around

Perhaps the most basic way to address phase problems is to simply move similar waveforms into their proper place. If two waveforms of similar signals aren't aligned, phasing is bound to occur, so simply shifting one of them to the left or right on the timeline can work as a quick fix.

There are even plug-ins available that will automatically line up waveforms to save you the hassle - Sound Radix's Auto-Align plug-in and MeldaProduction's MAutoAlign are two popular alignment choices.
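One common way to find the right shift automatically is cross-correlation: try a range of offsets and keep the one where the two signals match best. The sketch below is a brute-force illustration with made-up sample data, not a description of how any particular plug-in works.

```python
def best_lag(reference, delayed, max_lag):
    """Return how many samples `delayed` lags behind `reference` (negative if it is early)."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, ref in enumerate(reference):
            m = n + lag
            if 0 <= m < len(delayed):
                score += ref * delayed[m]
        if score > best_score:
            best, best_score = lag, score
    return best


def shift_earlier(samples, lag):
    """Move a signal `lag` samples earlier, padding with silence to keep its length."""
    if lag >= 0:
        return samples[lag:] + [0.0] * lag
    return [0.0] * (-lag) + samples[:lag]


# Made-up transient, captured once directly and once 7 samples late.
hit = [0.0, 1.0, 0.6, -0.4, -0.2, 0.1]
reference = [0.0] * 10 + hit + [0.0] * 20
delayed = [0.0] * 7 + reference[:-7]

lag = best_lag(reference, delayed, max_lag=15)
aligned = shift_earlier(delayed, lag)
print(lag, aligned == reference)
```

This approach works best on transient material such as drum hits. On sustained, periodic sounds several offsets can score almost equally well, so it is worth confirming the result by ear.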

Using Phase to Your Advantage

So far, we’ve mainly discussed phase interferences as problems to be solved. In truth, phase cancellation isn’t inherently a bad thing but merely an acoustical artifact that you can manipulate in several ways. Sure, steering clear of or correcting phase cancellation is one way to deal with this phenomenon.

However, if you know what you’re doing and what you want from your mix, you can use phase cancellation as another mixing tool.

For example, manipulating the phase relationship between guitar tracks can allow you to shape the resultant track’s tone (the same goes for the tone of any instrument or vocal), akin to a filter EQ. Specific devices (e.g., the Neve Portico 5016 and Phazer by Radial) contain phase-shifting circuits that let you choose which frequencies to reinforce and which to cancel, opening up unique tone-shaping possibilities.

Summing Up: It’s Just a Phase

We all go through phases, and so does every sound wave out there. As such, phase concerns will appear in any production you work on. What’s important is understanding why these interferences occur and what to do about them.

The more you understand and pay attention to audio phase, the better your mixes will be, and the fewer surprises you’ll run into when collapsing a stereo track into mono. So be vigilant, use the knowledge and tools available to you, and try not to get fazed by phase.

Phase FAQs

Still have questions about audio phase? Let's answer some frequently asked questions.

What is Phase Music?

Phase music intentionally uses the properties of phase as a compositional tool. Phase music often incorporates minimalistic, similar sounds (such as notes) with slight variations in frequency, tone, and/or tempo to create effects such as echo, delay, flanging, phasing, and more.

What is Phasing in Mixing?

During the mixing process, phasing may occur when there is a slight time delay between identical or related signals. This phasing can result in unwanted changes in tone and volume, but can also be used in creative ways.

How do you Diagnose Phasing Problems?

You can identify phase concerns in your music by developing your ear, mixing in mono, and using plug-ins designed to catch phasing.

What is Comb Filtering?

Comb filtering is a type of phasing that occurs when a signal is added to a slightly delayed copy of itself, resulting in both constructive and destructive interference - it usually occurs due to room reflections and/or during stereo recording. The phenomenon gets its name from the resulting frequency response, whose evenly spaced notches resemble the teeth of a hair comb.
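The shape of a comb filter can be sketched from first principles. Adding a signal to a copy of itself that is delayed by some time and scaled by some gain changes the level at each frequency by a predictable amount. The function name, the 1 ms delay, and the gain of 0.5 (standing in for a quieter reflection) are assumptions for illustration.

```python
import math


def comb_gain_db(frequency_hz, delay_s, copy_gain=1.0):
    """Level change at one frequency when a delayed, scaled copy is added to the original."""
    angle = 2.0 * math.pi * frequency_hz * delay_s
    power = 1.0 + copy_gain * copy_gain + 2.0 * copy_gain * math.cos(angle)
    magnitude = math.sqrt(max(0.0, power))  # guard against tiny negative rounding errors
    return 20.0 * math.log10(magnitude) if magnitude > 0 else float("-inf")


# A reflection arriving 1 ms late at half the level of the direct sound.
for frequency in (250, 500, 750, 1000, 1500, 2000):
    print(frequency, round(comb_gain_db(frequency, 0.001, copy_gain=0.5), 2))
```

With these values the dips fall at 500 Hz, 1500 Hz, and so on, while the boosts fall at 1000 Hz, 2000 Hz, and so on. A quieter copy produces shallower notches, and an equally loud copy produces near-total cancellation at the notch frequencies.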

Can the Human Ear Hear Phase?

While the human ear can't pick out the absolute phase of a waveform, it is sometimes sensitive to relative phase. For instance, many people will notice an audible shift when two identical sine waves are combined (as this creates a louder noise) or when a phaser effect is added to a signal.

How do you know if two Waves are in Phase?

Visually, two waveforms are in phase when their amplitudes (peaks and troughs) are lined up on a timeline.